Machine Learning

Edge computing on FPGAs

Machine Learning is the state-of-the-art approach to solving problems in many sectors, ranging from Industry 4.0, driver assistance and autonomous driving, medical technology and financial forecasting to virtual personal assistants. The terms Artificial Intelligence, Machine Learning and Deep Learning are not new to computer science, as their concepts were already introduced in the late 1950s. However, the exponential growth of processing and storage capabilities and the expansion of cloud services have caused the number of machine learning projects to grow over the last decade.

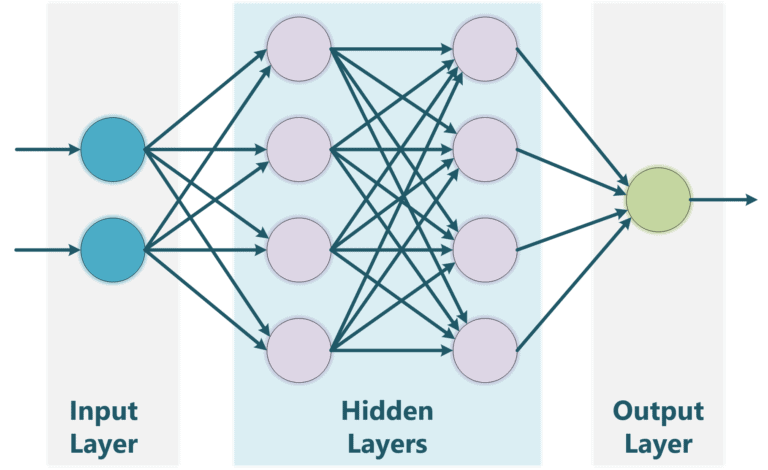

- Machine learning is a subfield of artificial intelligence that enables a machine to learn and improve through experience. The concept of the neural network is derived from the way the human brain works.

- A neural network's neurons are arranged in layers, called the input layer, the hidden layers and the output layer, where each neuron has connections to the neurons in its neighboring layers.

- The dependency between two connected neurons is described by a weight factor.

- Deep Learning is the subset of machine learning that makes the computation of neural networks with many hidden layers feasible.
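The layer-and-weight structure described above can be illustrated with a minimal Python sketch of one forward pass through a tiny fully connected network. Each neuron multiplies its inputs by weight factors, sums them with a bias and applies an activation function. The network size, the sigmoid activation and all weight values are hypothetical; in a real network the weights are learned during training.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: every neuron sums its weighted inputs,
    adds its bias and applies the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Propagate values from the input layer through all following layers."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical toy network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
# Each row of a weight matrix holds the weight factors of one neuron.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                               # output layer
]
output = forward([1.0, 0.5, -1.0], layers)
print(output)
```

Deep networks follow the same pattern with many more layers and neurons. This is why their computation quickly becomes expensive.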

In the machine vision market, Deep Learning is used mainly for image classification and object detection. To apply the model shown above to the classification of a traffic stop sign, one can imagine the functions of the deep learning layers as follows: The first layer represents a row of raw pixel data from a camera and passes pixels with a specific brightness on to the hidden layers. The hidden layers extract features such as round or rectangular shapes, which are then combined into more complex structures, such as letters (S, T, O, P) or the outer shape of the sign. Finally, the output layer makes a prediction. The “depth” of a Deep Neural Network (DNN) is derived from its number of layers, which depends on the network model: AlexNet, for example, has 8 layers with weights, while ResNet-152 has 152.
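In convolutional networks, the feature extraction performed by the hidden layers is based on small filter kernels slid across the image. The sketch below applies a hand-picked vertical-edge kernel to a tiny hypothetical image. In a trained network the kernel values are learned rather than chosen by hand. Like deep learning frameworks, the sketch computes a cross-correlation (the kernel is not flipped), which is what CNN layers call convolution.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 and no padding, implemented as
    cross-correlation (no kernel flip), as in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hand-picked vertical-edge kernel; a trained network learns its kernels.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# Hypothetical 4x5 grayscale image: dark left part, bright right part.
image = [[0, 0, 255, 255, 255] for _ in range(4)]
feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[765, 765, 0], [765, 765, 0]]
```

The large responses in the first two columns mark the dark-to-bright edge. The zero in the last column lies in the uniformly bright region. Deeper layers combine many such feature maps into more complex shapes.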

Edge computing on FPGAs?

DNNs can be executed either in real time on edge devices or remotely in the cloud. Depending on the application, it is not always desirable to be online all the time, because network traffic is higher and latency is worse. Cloud computing is also less reliable, as a loss of connection results in a failure. On the other hand, DNNs on edge devices must be optimized to a higher degree, because computing resources and permissible power dissipation are more limited. In return, edge devices offer deterministic real-time latency, minimal network traffic, and high data security and reliability.
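The difference in network traffic can be estimated with simple arithmetic. The sketch below compares streaming uncompressed camera frames to the cloud with sending only the inference results from an edge device. The resolution, frame rate and result encoding are hypothetical example values.

```python
def raw_video_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Bandwidth of an uncompressed video stream in Mbit/s."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


def results_mbit_per_s(detections_per_frame, bytes_per_detection, fps):
    """Bandwidth of a stream that carries only inference results in Mbit/s."""
    return detections_per_frame * bytes_per_detection * fps * 8 / 1e6


# Hypothetical camera: 1920x1080 RGB (3 bytes/pixel) at 30 fps.
video = raw_video_mbit_per_s(1920, 1080, 3, 30)
# Hypothetical results: up to 10 detections per frame, each stored as six
# 4-byte floats (4 box coordinates, class ID, confidence).
results = results_mbit_per_s(10, 6 * 4, 30)
print(f"raw video: {video:.1f} Mbit/s, results only: {results:.4f} Mbit/s")
```

Uncompressed 1080p RGB video at 30 fps already exceeds what a single Gigabit Ethernet link can carry. Video compression would lower the first figure considerably, but the results-only stream would still be orders of magnitude smaller.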

NATvision products achieve their performance by using embedded FPGA accelerators. The resulting units have an industrial form factor and can be aggregated into reliable high-performance processing racks with many camera devices.

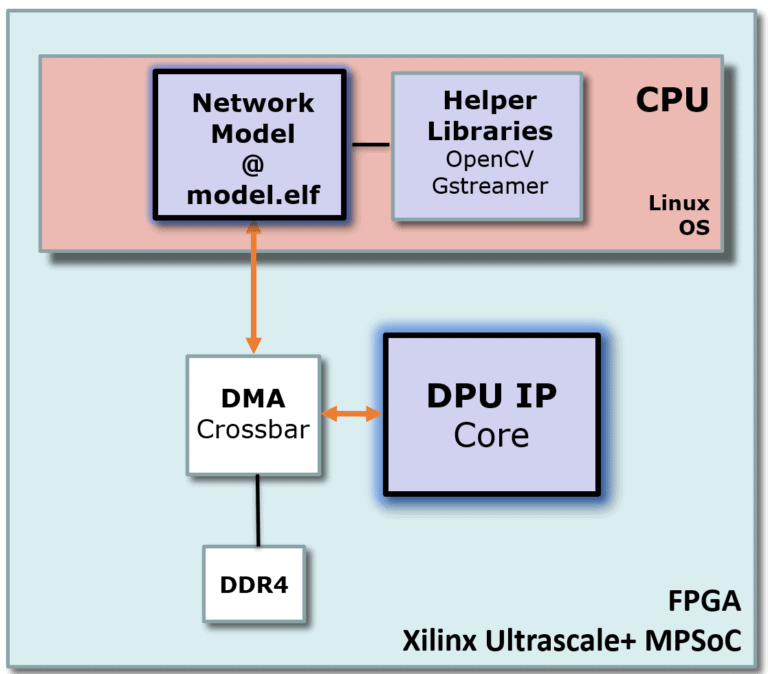

The heart of the processing platform is a System on Chip (SoC) that combines programmable logic (FPGA) and a quad-core ARM Cortex-A53 processor on a single die. This architecture is ideal for machine learning applications, as it provides both the required flexibility and the performance, with both units connected very efficiently by internal low-latency DMAs.

How to use this architecture for neural network applications?

Typically, neural networks are described in C, C++ or Python code for execution on a processor. Linux as an operating system offers useful tools such as GStreamer and OpenCV to prepare the input and output data of the neural network, for example image scaling, labeling, box drawing and network streaming. However, CPUs cannot execute the convolution operations that neural networks require as efficiently as FPGAs.
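Counting the multiply-accumulate (MAC) operations of a convolution shows why this is demanding. Every output value of a standard convolution layer requires kernel height × kernel width × input channels MACs. The layer dimensions and frame rate in this sketch are hypothetical and only indicate the order of magnitude.

```python
def conv_macs(out_h, out_w, out_channels, kernel_h, kernel_w, in_channels):
    """Multiply-accumulate (MAC) operations of one standard convolution
    layer: each output value needs kernel_h * kernel_w * in_channels MACs."""
    return out_h * out_w * out_channels * kernel_h * kernel_w * in_channels


# Hypothetical layer: 3x3 kernels, 64 input channels, 128 output channels,
# 104x104 output feature map.
macs_per_frame = conv_macs(104, 104, 128, 3, 3, 64)
macs_per_second = macs_per_frame * 30  # at a hypothetical 30 frames/s
print(f"{macs_per_frame / 1e6:.1f} million MACs per frame, "
      f"{macs_per_second / 1e9:.1f} billion MACs per second")
```

This single layer alone needs roughly 24 billion MACs per second, and a network contains many such layers. The operations are regular and independent of each other. This makes them a better fit for the parallel multiplier resources of FPGA logic than for the few cores of a CPU.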

The solution is to perform the calculations by a Deep Learning Processor Unit (DPU) implemented as FPGA logic to achieve the required performance.

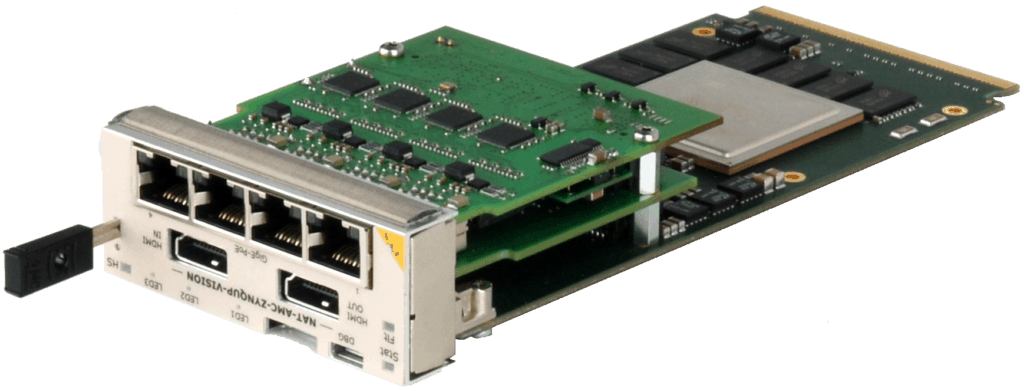

The NAT-AMC-ZYNQUP-VISION is an integrated hardware platform for machine vision and deep learning applications. It combines a Xilinx® Zynq® UltraScale+™ FPGA MPSoC with Power-over-Ethernet (PoE) switching and HDMI video interfaces to enable high-performance algorithm processing.

- Xilinx Zynq UltraScale+ MPSoC

- Quad GigE-Vision input camera interface with PoE

- HDMI 2.0 4K Input and Output

- Quad Channel DDR4-2400 for fast image buffering

- Optional: PCI-Express Gen 3.0 (x8) to external CPU Host-Card
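The memory figures above can be put into perspective with a rough estimate. The following sketch computes the theoretical peak bandwidth of quad-channel DDR4-2400 and compares it with the traffic of buffering 4K frames. The 64-bit bus width per channel, the RGB pixel format and the 60 fps frame rate are assumptions that the product list does not state. Real sustained throughput is also lower than the theoretical peak.

```python
def ddr_peak_gb_per_s(mega_transfers_per_s, bus_width_bits, channels):
    """Theoretical peak DDR bandwidth in GB/s; sustained throughput is lower."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) * channels / 1e9


def frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


# Assumption: 64-bit data bus per channel (not stated in the product list).
peak = ddr_peak_gb_per_s(2400, 64, 4)
# Assumption: 4K (3840x2160) RGB frames at 60 fps, each written once to the
# buffer and read back once.
uhd_frame = frame_bytes(3840, 2160, 3)
buffer_traffic = 2 * uhd_frame * 60 / 1e9
print(f"peak: {peak:.1f} GB/s, 4K60 buffering: {buffer_traffic:.2f} GB/s")
```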

How to get started?

Developing your own neural network application is not as hard as it may seem at first glance. Below is the step-by-step process to go through when developing a neural network application from scratch; each step corresponds to one phase of the process:

Choose your network model

The first step is to choose a network model that fits the end application. Plenty of models are available for image classification or object detection. Well-suited models for object detection are YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector).
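Object detectors such as YOLO and SSD typically output several overlapping candidate boxes for the same object. A common post-processing step is greedy non-maximum suppression (NMS) based on the intersection over union (IoU) of two boxes. The following generic sketch is not tied to any particular YOLO or SSD implementation. The boxes, scores and the 0.5 threshold are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop every remaining box
    that overlaps it by more than iou_threshold, and repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order
                 if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two overlapping candidates for the same bottle and one separate bottle.
boxes = [(10, 10, 50, 90), (12, 12, 52, 92), (100, 10, 140, 90)]
scores = [0.90, 0.75, 0.80]
kept = nms(boxes, scores)
print(kept)  # [0, 2]
```

The number of kept boxes is then the number of detected objects in the frame, which is the basis for counting applications.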

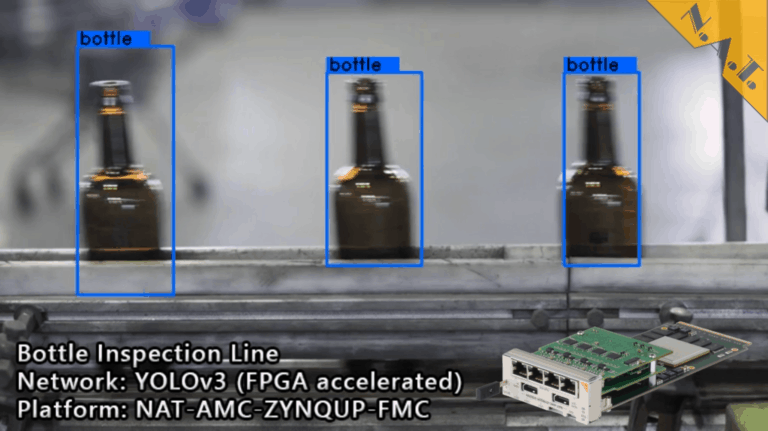

The picture on the right shows a YOLOv3 implementation on the NAT-AMC-ZYNQUP-FMC platform, set up to detect and count bottles for line inspection purposes. The network is accelerated by the FPGA to achieve the desired performance on the input video.

To classify images, models such as AlexNet (2012), GoogLeNet (2014) and ResNet (2015) can be used. These models are optimized to classify whole frames without providing the position of an object. They can also be used to detect abnormal states (anomaly detection). Typical applications include factory inspection, line clearance and the evaluation of X-ray images in medical technology.
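Classification networks such as AlexNet, GoogLeNet and ResNet end in an output layer that produces one score per class. A softmax function turns these scores into probabilities, and the most likely classes are reported. The labels and scores below are hypothetical, for example from a factory-inspection classifier.

```python
import math


def softmax(logits):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=2):
    """Return the k most likely (label, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda p: p[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and output scores of a 3-class inspection classifier.
labels = ["ok", "scratch", "missing_part"]
logits = [2.0, 0.5, -1.0]
ranked = top_k(logits, labels)
for label, p in ranked:
    print(f"{label}: {p:.3f}")
```

For anomaly detection, one option is to flag a frame whenever the probability of the "ok" class falls below a chosen threshold.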

Choose your framework

Creating Deep Neural Networks requires a development framework. Many frameworks are available for free as open source, such as TensorFlow, Caffe, Torch or Darknet. The choice of framework depends on the classification task of the end application. Some frameworks provide extensive functions, e.g. for feature extraction, but might also be more complex to use. Regardless of the framework chosen, the design process is always the same. The following steps need to be done on a host PC, workstation or cloud service:

Post processing and edge deployment

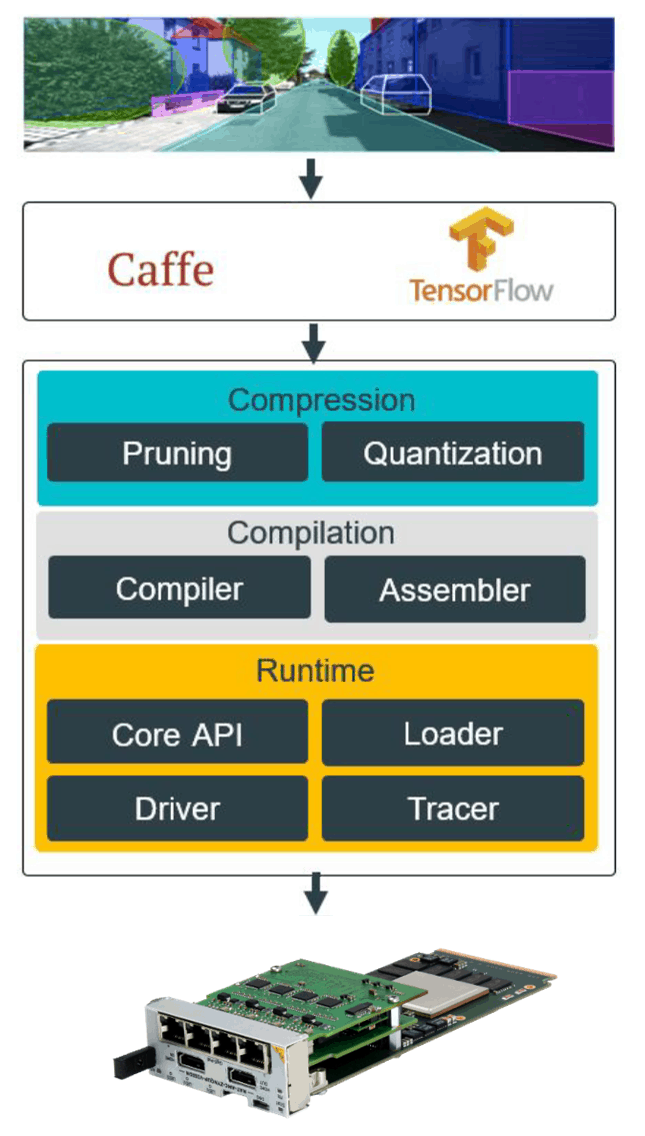

To achieve the necessary performance on edge devices, N.A.T. uses DPU accelerator kernels implemented in FPGA logic to offload the compute-intensive part from the CPU. For this approach to work, the network model needs to be post-processed and converted for the target architecture. For this purpose, N.A.T. offers a toolkit called “Vision-AI Docker”, which is provided as a Docker container and guides customers through the conversion process. Using the container requires only minimal knowledge of Linux, as it contains a complete environment with compilers, tools and makefiles preinstalled. Converting the network and deploying the end application can be achieved with a few commands. The toolkit is based on the Xilinx DECENT (Deep Compression Tool) [1] and contains elements to compress, optimize and deploy neural networks from the TensorFlow or Caffe frameworks.

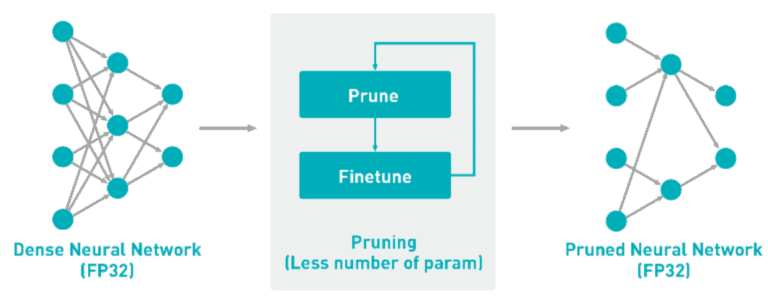

- Pruning: This is a form of DNN compression. It removes synaptic connections between neurons so that the overall amount of data is reduced. Eliminating redundant connections in this way typically causes only minor accuracy drops for tasks such as classification, while both memory and computational effort are reduced significantly.

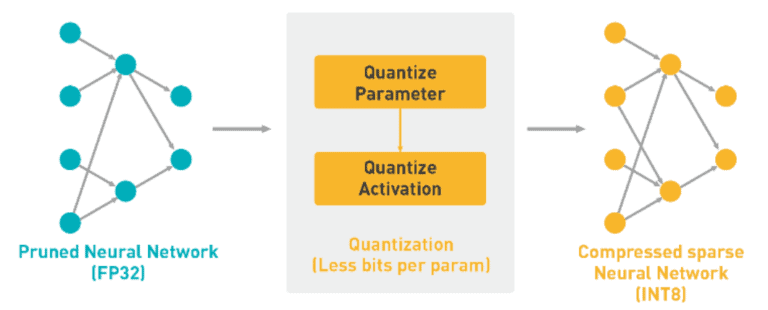

- Quantization: This method converts the floating-point numbers of the internal nodes (weights and activations) into the data format of the target architecture, for example 8-bit fixed-point values. The resulting loss of precision and accuracy is usually small.

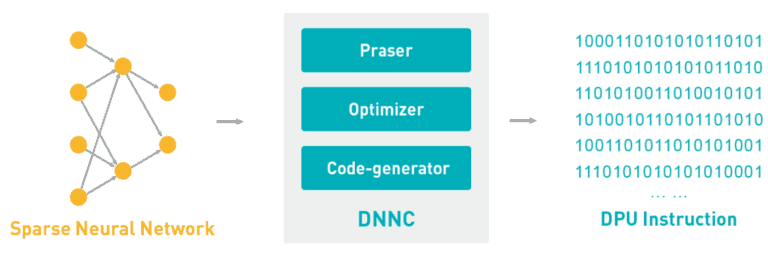

- Compilation: The compiler translates the neural network into executable instructions for the target DPU architecture.
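The pruning and quantization steps can be illustrated on a toy weight vector. This minimal Python sketch uses magnitude-based pruning (zeroing the weights with the smallest absolute values) and symmetric linear 8-bit quantization. These are common generic variants chosen for illustration; the exact methods used by DECENT and the DPU toolchain may differ. Real toolchains usually fine-tune or calibrate the network after these steps to limit the accuracy loss.

```python
def magnitude_prune(weights, sparsity):
    """Set the given fraction of weights with the smallest magnitude to zero."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute weight, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def quantize_int8(values):
    """Symmetric linear quantization to signed 8-bit integer codes.
    Returns the codes and the scale factor that maps them back to floats."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the int8 range as a safeguard against rounding overshoot.
    codes = [max(-128, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


# Hypothetical weights of one small layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, 0.5)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
codes, scale = quantize_int8(pruned)    # [127, 0, 48, 0, -95, 0]
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(pruned, codes, f"max quantization error: {max_error:.4f}")
```

Half of the weights become zero and need not be stored or multiplied. The remaining ones fit into 8 bits each instead of 32, and each is off by at most half a quantization step.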

Deploy application on target

In this step, the necessary infrastructure around the neural network is created. This includes instantiating the network in a C++ application together with the input and output image interfaces. The image source can be, for example, a GigE-Vision camera, and the output can be a GStreamer pipeline, a file or an HDMI monitor.
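The structure of such an application can be sketched as a loop. The loop pulls frames from a source, pre-processes them, runs the network and passes each frame and its results to an output stage. The sketch is written in Python for brevity, whereas the application described here is C++. It uses hypothetical stub functions in place of the camera, the DPU-accelerated network and the display, and it does not reproduce the API of the Vision-AI Docker examples.

```python
from typing import Callable, Iterable, List

Frame = List[List[int]]  # hypothetical grayscale frame representation


def run_pipeline(source: Iterable[Frame],
                 preprocess: Callable[[Frame], Frame],
                 infer: Callable[[Frame], list],
                 sink: Callable[[Frame, list], None]) -> int:
    """Pass every frame from the source through pre-processing and the
    network, then hand the original frame and the results to the output
    stage. Returns the number of processed frames."""
    processed = 0
    for frame in source:
        results = infer(preprocess(frame))
        sink(frame, results)
        processed += 1
    return processed


# Hypothetical stubs standing in for a GigE-Vision camera, image scaling,
# the DPU-accelerated network and an HDMI or GStreamer output.
camera_frames = [[[0] * 4 for _ in range(4)] for _ in range(3)]
displayed = []
count = run_pipeline(
    source=camera_frames,
    preprocess=lambda f: f,                  # e.g. resize and normalize
    infer=lambda f: [("bottle", 0.9)],       # fake detection result
    sink=lambda f, r: displayed.append(r),   # e.g. draw boxes, display
)
print(count, displayed[0])
```

Keeping source, inference and sink as separate stages makes it possible to swap the camera or the output without touching the network code.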

The Vision-AI Docker comes with example applications for both object detection and image classification which can be easily modified according to customer needs.

Once the compiled neural network has been linked with the application into an executable binary for the target architecture, it can be deployed to the target device.

References:

[1] Xilinx, DNNDK User Guide 168 UG1327 (v1.6) August 13, 2019