NATvision - Accelerated Vision

Accelerated Vision is our term for high-performance machine vision and deep learning solutions based on optimized FPGA algorithms for embedded applications. Our solutions are based on MicroTCA, a vendor-independent modular open system architecture (MOSA). The 19-inch rack-mount systems provide the infrastructure for clocking, triggering and synchronizing cameras without the need for external circuitry. The redundant design of the hot-swappable infrastructure (switching, power supply and cooling) guarantees high availability and maintainability. Compared to traditional PC/server-based computer vision systems, our platform offers more flexibility, lower development costs and unlimited scalability to any number of cameras.

Depending on the end application, we solve image processing tasks with artificial intelligence (AI), OpenCV, Visual Applets® or a combination of these. The algorithms are hardware-accelerated by FPGAs, which enables time-critical execution on the edge device. The resulting low latency makes the platform particularly suitable for real-time applications.

- Complete – a complete environment made of hardware and software building blocks, including hardware accelerators, systems, algorithms and enabling software.

- Modular – flexible combination of vision technology with industrial I/O such as AD/DA converters, sensors and triggers.

- Flexible – easy adaptation to changing application requirements by exchanging modular components instead of replacing the whole system.

- Scalable – any number of cameras and frame grabbers, any size of system.

- Performance – the massively parallel computing capability of FPGAs accelerates any kind of image processing application.

- Open – the open standard hardware platform guarantees interoperability of components from multiple vendors.

Applications

Vision inspection

- Line inspection and quality control

- Railway inspection

- PCB assembly inspection

- Line clearance

Analysis and merging

- Medical image analysis and merging

Video – public safety

- Traffic monitoring

- Public safety

- Filtering and analysis

- Camera link aggregation

- Compression and transcoding

The Environment

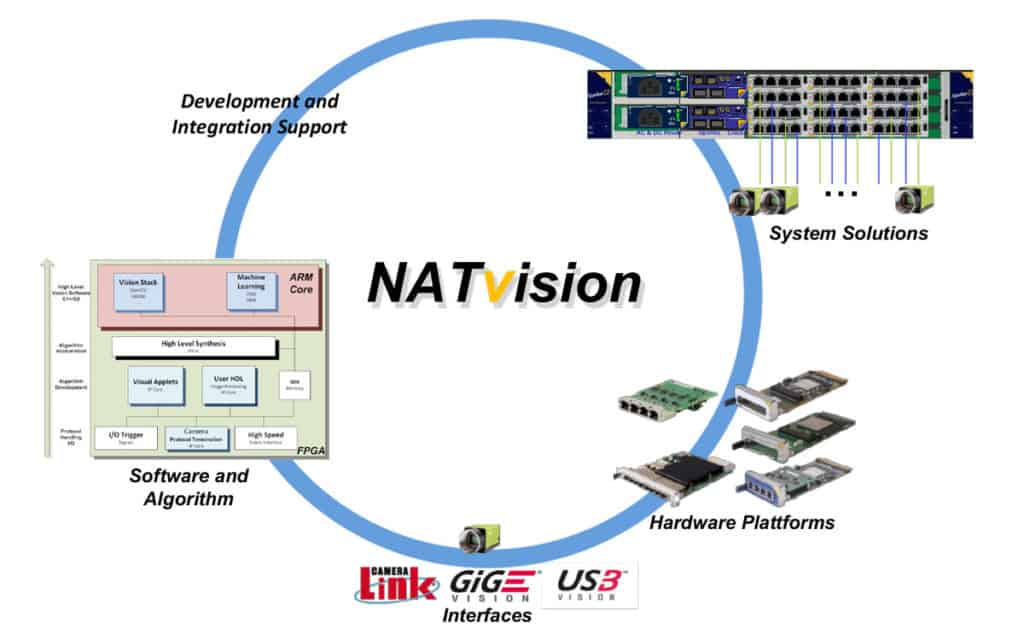

NATvision is a complete environment for the development and deployment of sophisticated image and video processing applications. The environment consists of a broad range of hardware products combined with selected software solutions and engineering support from N.A.T.

Designed as a machine vision processing platform, NATvision offers higher performance and lower development costs than comparable solutions, with unlimited scalability to any number of cameras. The NATvision technology uses today’s state-of-the-art FPGA resource boards, combining the performance and real-time advantages of FPGA-based algorithms with software-based image processing.

- Flexible and scalable system architecture

- Complete software suite

- Frame grabber boards

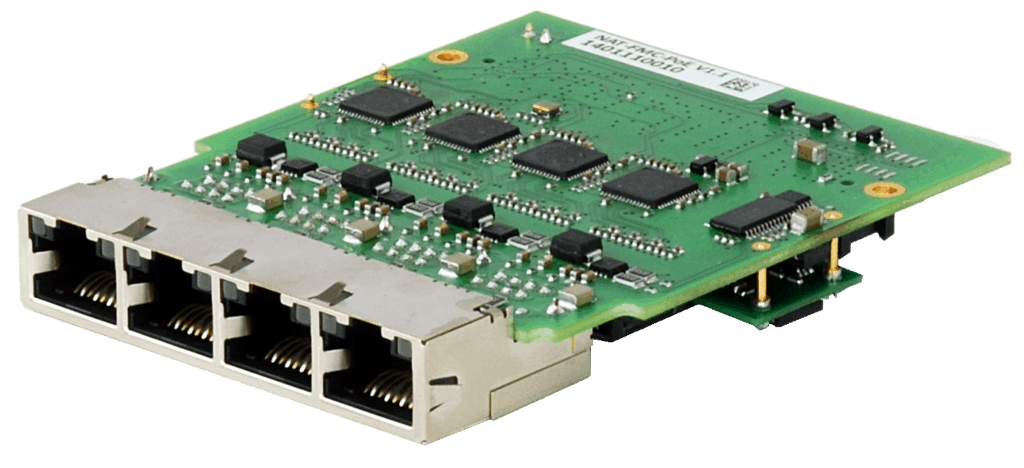

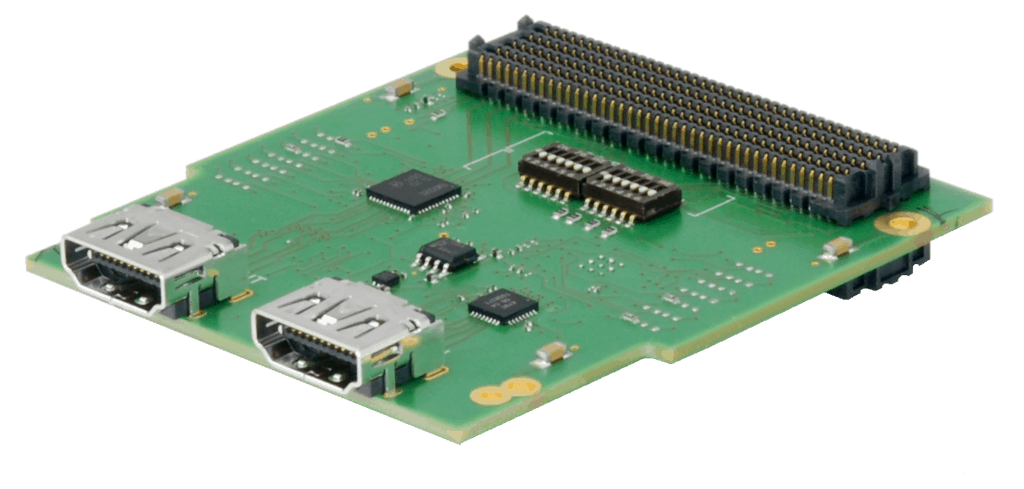

- Camera interfaces such as GigE Vision, USB and Camera Link

- Development and integration support by N.A.T.

Industrial System Standard

MicroTCA: Open - Scalable - Flexible - Modular - 19"

NATvision systems are based on MicroTCA (Micro Telecommunications Computing Architecture), a flexible and scalable 19" industry standard. Thanks to its high-performance board-to-board communication, this modular open system architecture (MOSA) is a perfect fit for all kinds of vision applications. It also includes circuits for synchronizing and triggering external cameras. Because MicroTCA is modular, it can easily be adapted to your specific application needs.

Hardware Advantages

- Flexible combination of FPGA, GPU and CPU boards

- Unlimited scalability and number of cameras

- High bandwidth interconnects through MTCA backplane

- Integrated synchronization and trigger

- Hardware acceleration by FPGA boards

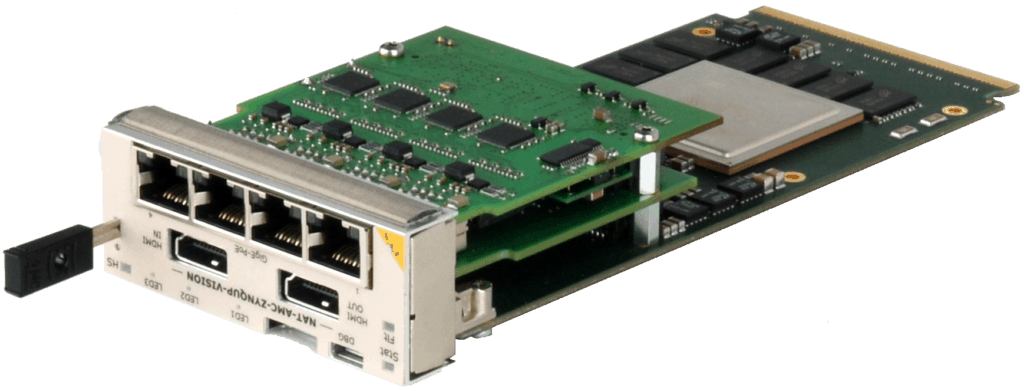

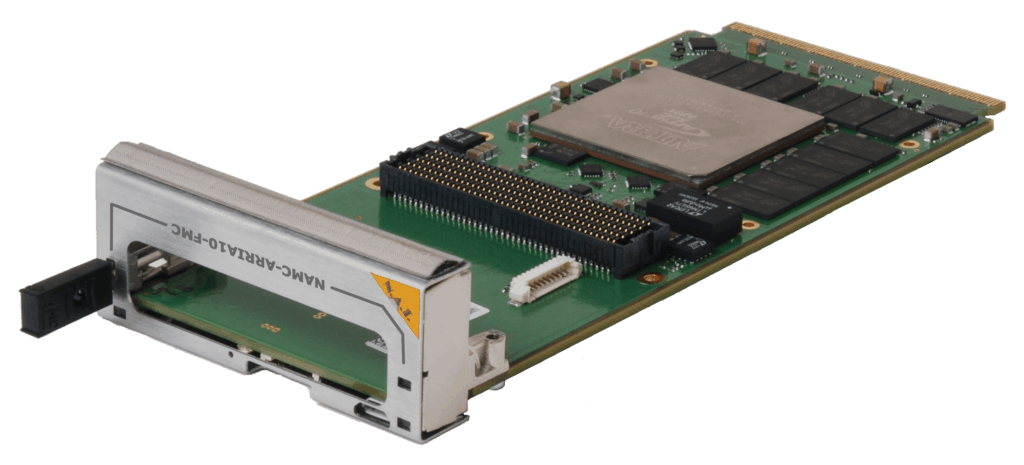

Frame Grabber and Processing Boards

Expandable with FPGA Mezzanine Cards (FMC)

An integral component of any vision application is a powerful image processing board (frame grabber). Within NATvision, this functionality is delivered by advanced FPGAs from Xilinx or Intel. Depending on the complexity of your application, you can choose from a range of frame grabber boards of different sizes and complexity.

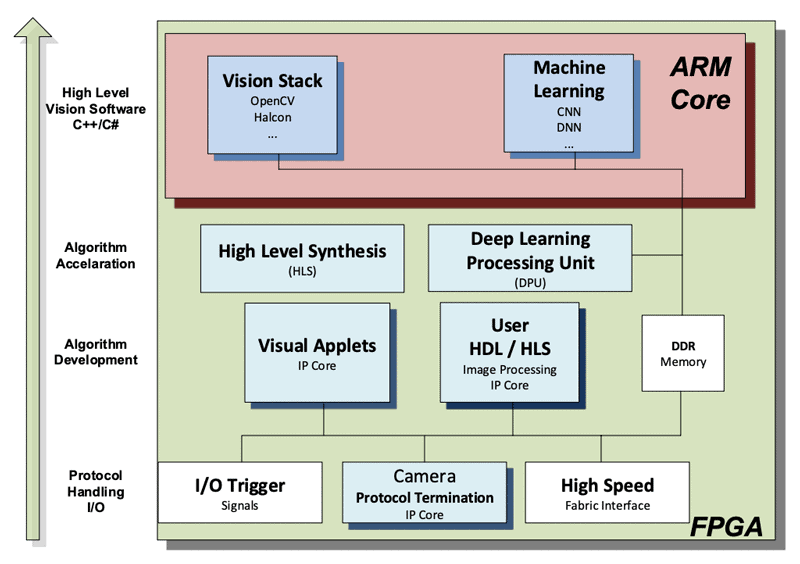

The Software Architecture

The NATvision software architecture provides a universal development platform for your solution. Depending on their background, developers can either design algorithms entirely graphically with Visual Applets or program the FPGA at register-transfer level (RTL). The hybrid processor/FPGA structure allows an efficient combination of C++ code and hardware acceleration; for example, you can use the high-level synthesis (HLS) toolbox to accelerate OpenCV algorithms on the FPGA. For deep learning networks (CNN, DNN) used in object detection and classification, we support the conversion of TensorFlow- and Caffe-based networks to our platform.

- Deep Learning (CNN, DNN)

- Support for state-of-the-art frameworks: TensorFlow and Caffe

- Develop algorithms graphically with Visual Applets

- High-Level Synthesis (HLS): offload processing to the FPGA

- Develop or reuse highly optimized HDL algorithms

- OpenCV processing library for CPUs

- Protocol handling of industry-standard camera interfaces
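HLS-based acceleration usually means writing an algorithm in C/C++ in a streaming style that a high-level synthesis tool can map to FPGA logic. The following is a minimal, tool-independent sketch of that style, assuming a hypothetical 8 x 6 frame of 8-bit grayscale pixels: a 3x3 box filter that consumes one pixel per loop iteration and keeps only two line buffers and a 3x3 window on chip. The function names, frame size and filter are illustrative assumptions, not part of the NATvision software or any HLS toolbox; tool-specific directives (such as pipeline pragmas) are only indicated in comments. The same C++ can be compiled and checked on a CPU against a straightforward reference implementation, which is a common way to verify HLS code before synthesis.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical frame size for this sketch; a real design would use the camera resolution.
constexpr int kWidth = 8;
constexpr int kHeight = 6;
constexpr int kOutSize = (kHeight - 2) * (kWidth - 2);  // valid (non-border) region

// Streaming 3x3 box filter: pixels arrive in raster order, one per iteration.
// Only the valid interior region is produced, also in raster order.
void box3x3_stream(const uint8_t in[kHeight * kWidth], uint8_t out[kOutSize]) {
    uint8_t line[2][kWidth] = {};  // line buffers: rows r-2 and r-1
    uint8_t win[3][3] = {};        // 3x3 sliding window

    for (int r = 0; r < kHeight; ++r) {
        for (int c = 0; c < kWidth; ++c) {
            // In an HLS tool, this loop would typically carry a pipeline
            // directive so that one new pixel is accepted per clock cycle.
            const uint8_t px = in[r * kWidth + c];

            // Shift the window one column to the left.
            for (int i = 0; i < 3; ++i) {
                win[i][0] = win[i][1];
                win[i][1] = win[i][2];
            }
            // New right-hand column: rows r-2, r-1 (from line buffers) and r (input).
            win[0][2] = line[0][c];
            win[1][2] = line[1][c];
            win[2][2] = px;

            // Advance the line buffers for this column.
            line[0][c] = line[1][c];
            line[1][c] = px;

            // Once a full 3x3 neighbourhood is available, emit the filtered
            // value for the window centre at (r-1, c-1).
            if (r >= 2 && c >= 2) {
                int sum = 0;
                for (int i = 0; i < 3; ++i)
                    for (int j = 0; j < 3; ++j)
                        sum += win[i][j];
                out[(r - 2) * (kWidth - 2) + (c - 2)] = static_cast<uint8_t>(sum / 9);
            }
        }
    }
}

// Straightforward frame-based reference (random access to the whole image).
void box3x3_reference(const uint8_t in[kHeight * kWidth], uint8_t out[kOutSize]) {
    for (int y = 1; y < kHeight - 1; ++y) {
        for (int x = 1; x < kWidth - 1; ++x) {
            int sum = 0;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    sum += in[(y + dy) * kWidth + (x + dx)];
            out[(y - 1) * (kWidth - 2) + (x - 1)] = static_cast<uint8_t>(sum / 9);
        }
    }
}

// C-simulation style check: the streaming version must match the reference.
bool stream_matches_reference(const uint8_t in[kHeight * kWidth]) {
    uint8_t a[kOutSize];
    uint8_t b[kOutSize];
    box3x3_stream(in, a);
    box3x3_reference(in, b);
    for (int i = 0; i < kOutSize; ++i)
        if (a[i] != b[i]) return false;
    return true;
}
```

In a testbench, a call such as `assert(stream_matches_reference(frame));` checks the streaming kernel before synthesis. Keeping the window in registers and the previous rows in line buffers means each input pixel is read exactly once, which is what lets an FPGA implementation sustain one pixel per clock; a production OpenCV-style filter would also need a border-handling policy, which this sketch sidesteps by emitting only the (H-2) x (W-2) valid region.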

Machine Learning

Using NATvision hardware, machine learning applications can be implemented accurately and cost-efficiently. For the implementation, we rely on hybrid CPU/FPGA technology, which offers significant advantages over conventional systems. Our systems compute results in real time on the latest FPGA technology and guarantee a high level of accuracy and availability.

- Computing on the edge device ensures real-time latency and maximum reliability.

- The extensive calculations of a neural network are offloaded to the FPGA.

- The FPGA ensures the overall performance of the application.

- The hybrid system architecture made of FPGA & CPU ensures maximum flexibility.

Development and integration support by N.A.T.

N.A.T. services range from supplying bare vision hardware components to delivering complete, individually tailored turnkey solutions. If you have a machine vision application in mind, talk to us.

- Hardware

- Firmware

- Development and integration support

- Consulting

- Customer specific software development and services

- Turnkey solutions